Don’t Build An AI Safety Movement

Once again, the field is summoning spirits it cannot control

Safety advocates are about to change the AI policy debate for the worse. Faced with political adversity, few recent policy wins, and a perceived lack of obvious paths to policy victory, the movement yearns for a different way forward. One school of thought is growing in popularity: to create political incentive to get serious about safety policy, one must ‘build a movement’. That is, one must create widespread salience of AI safety topics and channel it into an organised constituency that puts pressure on policymakers.

Recent weeks have seen more and more signs of efforts to build a popular movement. In two weeks, AI safety progenitors Eliezer Yudkowsky and Nate Soares are publishing a general-audience book to shore up public awareness and support – with a media tour to boot, I’m sure. PauseAI’s campaigns are growing in popularity and ecosystem support, with a recent UK-based swipe at Google DeepMind drawing national headlines. And successful safety career accelerator MATS is now also in the business of funneling young talent into attempts to build a movement. Now, these efforts are in their very early stages, and might still just stumble on their own. But they point to a broader motivation – one that’s worth seriously discussing now.

I think the push toward building a popular AI safety movement is serious, well-funded, set up to gain traction – and quite likely to do more harm than good. The field of AI safety as well as the broader field of frontier AI policy should have serious reservations about it. On safetyist merits, it fails both the elite-focused and the popular strategy: It exposes reasonable AI safety organisations to association with perceived crackpot protesters. And it hurts existing popular support for AI safety measures by linking that support to astroturfed movement-building.

And on broader terms, I think this movement is likely to be captured by reductive overtones and ultimately muddy the policy conversation through intractable or misguided proposals. Just as much as I worry about the AI policy debate being derailed by its inevitable politicisation through flashpoints like job impacts, I worry about the safety movement forcefully introducing the same kind of chaos in an attempt to flip the gameboard.

The Makings of a Movement?

Let’s start by looking at the kind of movement that’s likely to be built here. Many roads lead safety advocates of different stripes to the movement-building conclusion. Some are guided by the notion that the public already deeply cares about AI safety, and its preferences must be channeled. Some suppose that the public doesn’t, but really ought to care about AI safety, and so it must be taught and guided. And some simply think that the past elite-focused strategy has run its course, and is failing in the face of industry lobbying and adverse political sentiment in the current US administration. Their takeaways converge: AI safety needs a political constituency. And because time is wasting, they conclude it’s high time to build up that constituency.

Working from the underlying goals, we can intuit some features of the playbook: Familiarise sympathetic constituencies (i.e. people with reservations about AI) with the most salient arguments for focusing on the safety angle specifically; unite them in the context of local communities and global information ecosystems; expand on that reach and group through public-facing content. Then, point to that community as a latent constituency for general political proof, and mobilise as needed in the context of specific policy debate. The already-public instances I’ve quoted above make for a reasonable pipeline along these lines.

The motivations and constituencies that such a movement would have to channel are very heterogenous. Quite clearly, the general political support for AI regulation is not primarily motivated by the safety canon. It seems unlikely that you can build a bona fide movement just from the same complicated arguments on risks from loss-of-control and misuse. It’s not for a lack of Time magazine covers that those have not caught on as a political platform. Instead, a successful movement-building effort likely taps into existing sentiment around salient political values – by drawing on labor issues and fears of job displacement; on environmental issues, on anti-tech sentiment, on fear of inequality, on past shocks from digitisation, and so on.

For the movement to galvanise existing political support, its message needs to be compatible with at least some of these points. I trust that people likely to be involved will refrain from indulging in blatant misinformation, but they’ll have to paint with a fairly broad brush – i.e. you will probably not be able to engage and build a movement around sophisticated and clever policy angles as much as around very simple claims. Naming your big stab at public engagement ‘If Anyone Builds It, Everyone Dies’ is perhaps a good first indicator of how that will go.

Building and focusing a movement from these components around this message will be very difficult. I think a generally useful abstraction of movements is: People commit to vague ideas and specific asks, and they measure their movement’s success and failure by its ability to track these asks. That instills serious path dependency: Changing goals can often feel like betrayal, discussions about pivots often lead to splintering and infighting. The sticking power of a movement, and its related ability to stay on-message and effective, depends on how well its ideas and asks are suited to continue guiding its actions. AI safety is ill-suited to do so: neither its policy asks nor its ideas can capture an audience well.

Changing Policy Asks

On policy, the issue is: If the ‘AI safety movement’ wants to be an effective tool for supporting AI safety policy, it needs to ask for actually useful policies – which means it’ll have to keep changing its tune. That’s because we don’t actually know all that much about ultimately useful AI safety policy just yet. I’ve argued as much in greater detail before; the short version is: Specifics of the paradigm leading to dangerous AI systems keep changing, and so do the policy levers that address them. Moving parts like the competitiveness of Chinese models, the split between inference and training compute, or the role of pretraining vs post-training for pushing the capability frontier make a big difference for the policies actually required to address safety risks. If you had built a political movement around specific asks – like, say, a pause at GPT-4-level capabilities, or training thresholds along the lines of 10^25 FLOP – it would basically be unworkable today.

It’s very hard to build a movement around ever-changing policy asks. The AI safety movement is used to a cadre capable and willing to change specific asks quickly. Popular movements cannot do that: they often operate on useful abstractions of the high-level concepts. It’s unrealistic to assume that you’ll get a broad popular movement to operate on a similar level of technical awareness and policy flexibility as a narrow elite movement. Look at the outrageously successful Leave movement for Britain’s exit from the European Union: it did very well focused on a single policy, but in doing so, it united a very heterogenous crowd of euroskeptics whose many different reasons could not easily have been transferred to another issue – and in fact they weren’t, if you look at UKIP’s weak numbers in the years after the referendum. The AI safety movement has no overarching policy goal as ambitious as leaving the EU. Quite the opposite: absent specific policy asks, the current AI safety movement has, for now, focused on ‘low-hanging fruit’ like transparency or whistleblower protections. But these don’t have the scale or sound to rally and motivate a movement – you need bigger-ticket items to galvanise.

Intractable Results

But other than concrete policy wins and losses, there’s little change in the world to track as a measure of safety wins. A marked change in the risk of extinction from AGI is not visible anywhere in the world; it doesn’t feel like a win. Some things that look like wins, like advanced AI models no longer being deployed externally but confined to internal use in labs, can actually be counterproductive. The quickly changing landscape of required AI safety legislation makes it very hard to keep a movement narrowly focused on policy asks; the lack of observable proxies for decreasing AI risks makes it hard to justify policy pivots by universally agreed metrics. External metrics – say, roadside deaths that allow you to shift from seatbelt laws to automotive design to speed limits to whatever else at will – enable pivots to remain on mission. AI safety has none.

The upshot is: This movement will likely start to drift away from its underlying goal; get stuck on outdated policy asks, optimise for vaguely related vibes, and not do a lot of helpful things at all.

Worse Than Useless?

In the face of this effect, you might ask: Is it enough if the movement just voices general support for ‘safety policy’ and doesn’t track the nuances of the policy conversation in sophisticated detail? Can’t policy organisations then point to that sentiment and argue that it indicates support for their particular policies? Not quite, because any mismatch dramatically weakens policymakers’ incentive to make useful policy.

Whether it’s through direct interactions between the movement and policymakers, or through policy organisations pointing at the movement to motivate their asks, the pitch needs to be: If you do what we suggest, the movement and the broader political current it represents will reward that electorally. The harder it is to draw a line between a specific policy ask and the movement’s motivation, the less plausible the pitch becomes; and the easier it is to justify vaguely related but ineffective policies on the same ground instead. There’s a lot of AI policy that works well to respond to ‘people want AI regulation’ but not well to ‘let’s make progress on genuine AI safety’. Any mismatch and vagueness risks more of the former, less of the latter.

To make matters much worse, the lack of sticking power of reasonably safetyist ideas dramatically increases the risk of movement capture. There are much more politically attractive and effective ideas very close to the safetyist agenda that can readily take over the movement — especially in the context of a political environment leading up to what’s shaping up to be a fairly populist 2028 election cycle.

Safety-focused ideas can quickly lose their grasp on the movement they helped build, and give way to these issues. It’s not exactly an uncommon experience to build some movement that leans on popular and populist undercurrents and have it become an everything bagel of populist commitments. General anti-corporate or anti-tech sentiment will be a big part of what motivates people to join the movement, and could well stick around. So could adjacent worries around bias, discrimination, the environment, or general equality. And when movement leadership struggles to find a good safety-focused story for what people should ask and do, it’s easy for these much more tractable broader-brush components of the movement to increasingly determine its direction. You can avoid that via message discipline, but as I’ve argued, the message of a safety movement doesn’t lend itself well to disciplining a movement. For a safety advocate, that means you shouldn’t count on the movement remaining very useful for long. For everyone else, it means someone is introducing a chaotic political force into the policy conversation – beware.

Astroturfing & Organic Support

So, maybe this movement fails and doesn’t go so well. From the narrow safetyist view, is that such bad news? Directional support for some regulation is probably still fine, and it’s not like there’s much to lose, right? I don’t think so. A badly-executed safety movement hurts, no matter whether you think the path to successful safety policy runs through popular support or elite outreach.

First, incubating a movement drastically reduces the credibility and political usefulness of existing popular support. That popular support goes a long way toward safety asks today already: it motivates policymakers across the spectrum to espouse safety-adjacent ideas, and it’s been instrumental in one of the few political victories in recent months – the death of a proposed federal moratorium on state AI legislation. Popular support for AI regulation and thereby opposition to this moratorium was channeled through avatars like Steve Bannon and Senator Marsha Blackburn and ultimately convinced 99 senators to strike down the amendment. This worked because policymakers had been convinced that there was genuine, grassroots opposition to the moratorium, and supporters of the moratorium had no good response.

If that episode had been preceded by movement-building efforts in the populist right, I’m not sure the popular support would have had the same weight. Public asks could have been easily identified as being driven by groups with agendas, reducing their credibility and organic appearance. Supporters of the moratorium could have pointed at incubation efforts to discredit this support; noting that waves of calls could be linked to.1 Even as it stands right now, senior administration officials are keeping a watchful eye on AI safety funding streams and how they relate to policy advocacy, readily dismissing much input that can be linked to major funders in the safety space. That dismissal can quickly extend to *all* public support once incubation efforts inevitably become public. Soon, even organic support for AI regulation that would also have existed otherwise will be painted with the same brush, reducing its credibility in the eyes of decisionmakers and ultimately hurting political leverage.

Supporters of the movement-building effort sometimes point out to me that this doesn’t matter: public support is electorally important, no matter who motivated it. But that’s a reductive view: Policymakers read public support as an indicator of overall public sentiment. They’re not trying to get the juicy 5,000 votes in the anti-AI movement, they’re betting it reveals a broader undercurrent with much greater electoral promise. But if the 5,000 anti-AI movers are incubated and placed strategically, policymakers will be much less likely to take them as indicators for an existing political mood.

This is of course bad because it makes the popular movement attempt much less likely to be effective. But it’s particularly bad if you think that even without movement-building efforts, broad public support would likely be somewhat fine. As I’ve written before, I think there are genuinely promising political flashpoints for AI safety – it’ll be hard to leverage them well, but general political support seems unlikely to be the bottleneck. On that view, any movement-building attempt today poses a substantial risk: painting existing popular support with the appearance of being astroturfed. It’ll be more likely to be dismissed, and political capital will be lost. I think AI safety risks squandering a rare instance of the public already on its side.

Radical Flanks & Expert Advocacy

So much for the popular side of AI safety advocacy. The elite-focused branch of AI safety has its shortcomings, but does a lot of valuable work. Work that continues on despite the sometimes-gloomy mood of safety advocates: in expert advocacy, good research, deep engagement and eminently reasonable negotiation. Expert advocacy would take a hit from a badly executed popular movement.

That’s because anything ‘AI safety’ will get tagged with a movement’s worst excesses. This is for two reasons: First, a lot of the AI safety discussion is still not happening out in the public. Only a small expert audience is familiar in some detail with the argument for AI safety, its intellectual history, and how to read its political undercurrents. Activities of a movement will likely be more publicly salient than any more sophisticated discussion in the past, and they’ll inform what people think about AI safety. Just look at the hard hit AI safety already took after Sam Bankman-Fried lost the St Petersburg game. And that was really not a big media story, compared to the kind of coverage visible protests tend to get and need.

And second, AI safety faces real political adversity, and it will inevitably be under attack from its opponents again. A movement creates ammunition: public galvanisation always requires uncomfortable alliances, association with more extreme positions, some overzealous public communications – failures that can quickly be pinned onto safety advocates. Whenever the movement missteps, it will serve as grounds for attacks against the general safety platform. The likely associations through funding sources between the movement and policy organisations make it much worse still – as I’ve written about before:

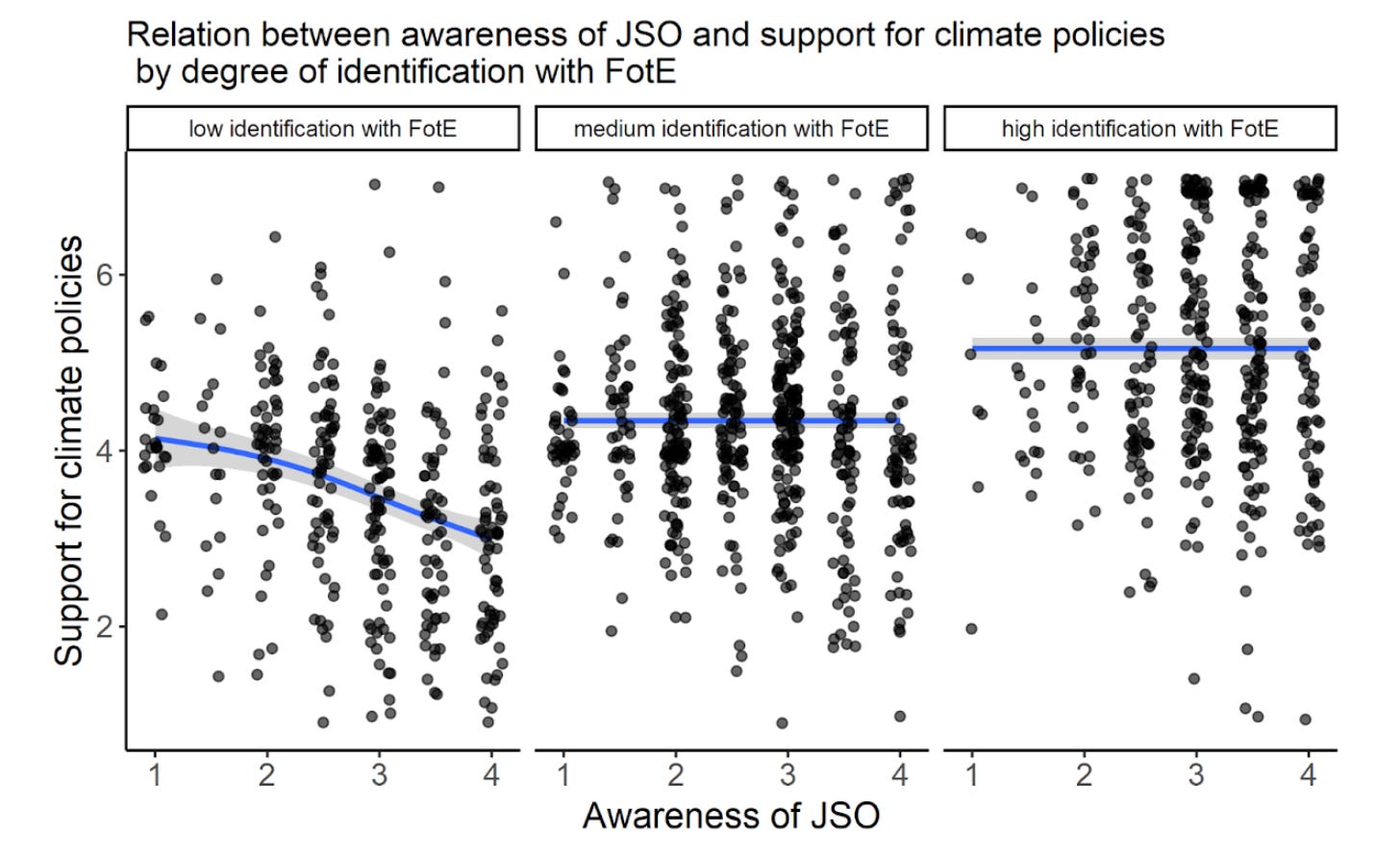

This is not dissimilar to the general discussion of the radical flank effect in the context of e.g. environmental protests. Much research suggests that vocal activist groups can benefit moderates, as long as these moderates are perceived as clearly distinct and cannot be linked to the more radical protests. This degree of distinction is very unlikely to occur in the specific context of proactively incubated safety-focused movements.

Outlook

What to make of all this? I think it points toward rejecting the idea of building a popular movement in support of AI safety altogether – both because of the ‘building’ part that burdens popular support with bad PR, and because of the ‘popular movement’ part that contains risks of capture, reputational backfiring, and harm to the quality of overall AI policy.

In the spirit of constructive input, I think a better version of this banks on narrow, issue-specific or group-specific organisations. As so often, I think Encode is a great example of an effective organisation here. A heterogenous mix of similar organisations that can credibly claim to represent a narrow constituency’s convictions as they relate to AI could be quite helpful. It would have to build on narrow, pet issues – just like Encode has made some substantial progress by pairing its youth advocacy brand with CSAM-focused legislation. Intersections can be labour and work, artists and copyright, and so on. This reduces a couple of failure modes. First, specific, more narrow movements resist the risk of capture and the downsides of a shifting platform. Second, the guilt-by-association effect of a controversial movement on the activities of existing safety organisations is much lower when the movements themselves aren’t identifiable as primarily safety-focused. From policy fight to policy fight, narrower movements still provide cloud cover and political muscle.

A broad, AI-safety-focused push for creating and galvanising public support, however, simply strikes me as a mistake. I think funders in the AI safety space would do well to dissociate themselves from movement-building efforts very clearly, lest they expose their more effective projects to guilt by association. For the same reason, credible organisations and accelerators should refrain from engaging in movement-building efforts, especially publicly. And policy organisations in the field should not attempt to ride any waves that upcoming book releases or miscellaneous protests might make.

AI safety has two things going for it at the moment: Latent authentic political support and high expert-level credibility. Incubating an AI safety popular movement puts all of that at risk. Funders and drivers of this push should reconsider.

1. The only reason this didn’t happen this time around is that the PauseAI contribution to the anti-moratorium case paled dramatically in comparison to the Bannon-led push.