A Moving Target

Why we might not be quite ready to comprehensively regulate AI, and why it matters.

British Far East Command believed it had the Japanese threat under lock and key: Fortresses with heavy artillery stood ready to repel any naval assault from the south. When the Japanese came on bicycles instead of ships and launched a major attack from the north, Singapore fell, in what Churchill described as Britain’s ‘worst disaster’.

Last night, the proposed moratorium on state-level regulation of AI was struck from the reconciliation bill. The final 99-1 vote was the result of an exhausting and exhilarating few weeks of relentless debate and unlikely coalitions, ranging all the way from Marjorie Taylor Greene to Elizabeth Warren. In broad terms, a moratorium like this – particularly the sweeping versions originally proposed – would have been inelegant and ineffective policy, and it’s good it did not pass. Tying yourself to the mast is an honourable sentiment in principle, but it falls apart in cases like AI, where urgent action might be required at any point. Nevertheless, there was an interesting undercurrent to the conversation that got much less attention than it deserved: When is it too early to regulate a fast-moving technology like AI?

Precaution and the pursuit of regulatory leadership, both of which some opponents of the moratorium have invoked, are a treacherous basis for technology regulation. The story of recent stabs at AI regulation makes that clear.

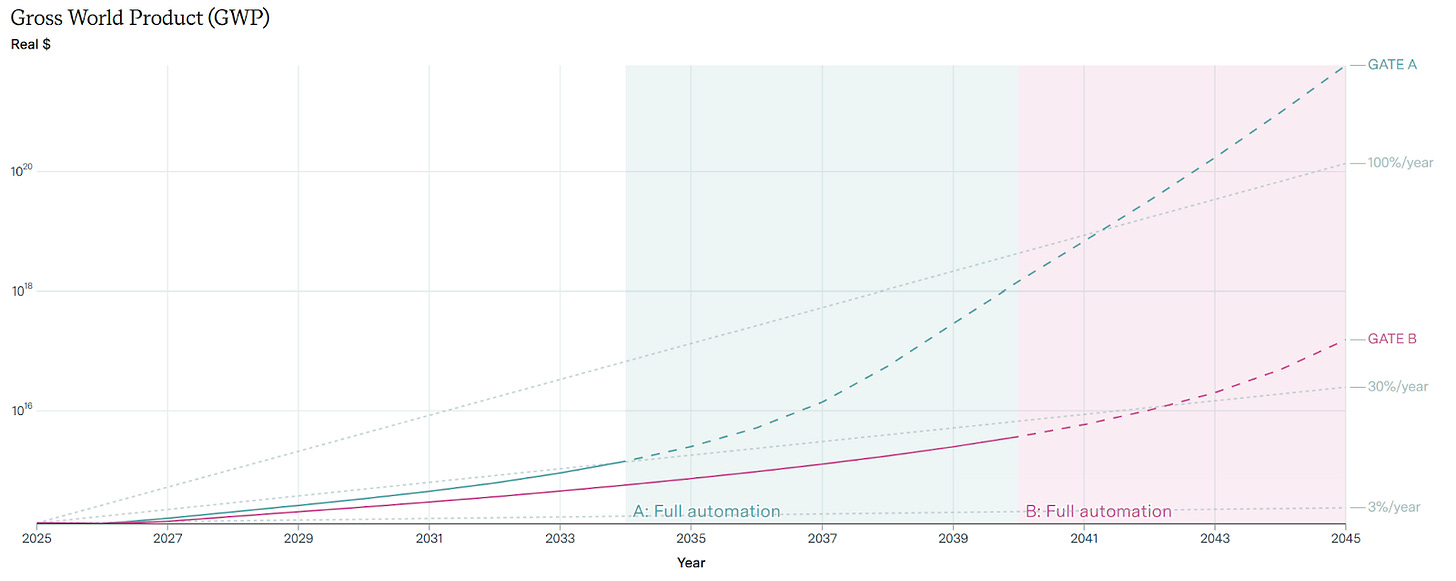

Take, for example, the EU AI Act. At first, it tried to regulate primarily at the level of deployed systems, but then the AI paradigm shifted toward powerful general-purpose models. After a last-minute provision on general-purpose AI, including compute thresholds and pre-deployment obligations, was tacked onto the Act, the paradigm shifted again: The move to inference scaling and iterative deployment rendered pre-training compute thresholds and pre-deployment obligations onerous and confusing – making further adjustment through the Code of Practice necessary. Throughout the AI Act’s legislative process, its authors time and again took aim at the technical paradigm of the day, only to find it had moved on shortly thereafter. SB-1047 tells a similar story: Had last year’s version with its proposed compute thresholds passed, it would now struggle to classify the current technical frontier.

This is a deep problem. If tomorrow, the US government came to me and asked me what AI policy should ideally be implemented – not what would be politically feasible, not which low-hanging fruits to grab, but what to do beyond that – I would not be able to give a very strong response. I don’t know which technical paradigm current trajectories will end up in, and that makes me very uncertain about the right scope and subject of regulation: Do we regulate models, systems or labs; must we stipulate ex-ante rules or can we work with ex-post incentives?

My sense is that many thoughtful people in AI policy feel the same way. By and large, we can identify some directionally good first steps, but struggle to articulate a cohesive vision beyond that. This uncertainty has very real effects – for instance, it made things harder for opponents of the moratorium: It would have been much easier to make the case against the moratorium if you could clearly say what broad legislative platform you were hoping to pass. It’s easy to dismiss this in hindsight, but this fight was arguably a lot closer than the final tally implies, and it is still worth learning from.

Addendum: I have received some pushback on this, but I’m not quite convinced yet. Of course, this mechanism does not hold in all cases: There are many ‘minimal’ supporters who will be most convinced by the least comprehensive regulatory agenda. But if you're looking for proactive champions – people to do groundwork on bills and to raise awareness as a function of their interest in owning the issue – it feels like you need to hand them a solution they can run a policy campaign on. I think it's much less interesting to be the political voice of ‘AI is really big’ if you're not also the one who has the solution. Sure, it's still a somewhat attractive position, but I feel it would be much easier to get people there if you could give them clearer ideas.

Both on policy and political grounds, we have to grapple with the bigger questions at some point. Paralysed inaction in the face of dynamic technological development can’t be the answer. So when do we know enough to hazard a shot at the moving target?

Unreliable Snapshots

Currently, the technical trajectory seems to point toward AI agents with increasingly long horizons, trained by applying a lot of reinforcement learning to transformer-based models, which then spend substantial inference compute to autonomously do things for a long time. Sector by sector, these agents might be able to carry out sophisticated task sequences – starting with easily trainable domains rich in data and verifiability, like software engineering, and moving toward fuzzier domains. Such agents would come with a range of idiosyncratic policy implications: They might be job displacers, but displacement would likely happen sector by sector rather than homogeneously – which might inform our thoughts on retraining and adoption barriers. Their success might be highly reliant on data about how humans do their jobs – which might inform our thoughts on enabling the equitable sale and procurement of such data. They might be very hungry for inference compute, and less so for pretraining compute – which might inform our thoughts on how to define a capable, regulation-worthy system. These are extremely important questions, and I think we would struggle to make meaningful AI policy without answering them.

The list goes on, but it really is worth noting how technically contingent all the answers are. They do not transfer to all other AI systems, to be sure; but they don’t even transfer to other systems based on transformers (as in a more pretraining-heavy paradigm), to other systems that feature reinforcement learning (such as models that would principally rely on some kind of self-play), to systems that achieve greater agency through repeated queries drawing on greater context, etc. Crucial, decisive elements of any major AI legislation are entirely contingent on this snapshot assessment of future technological trends. But these snapshots are not particularly reliable. We could attempt to make them more reliable by drawing on preceding or lagging indicators, but each falls short.

Preceding Indicators

On the preceding side, the allocation of compute toward models and systems would in theory seem like the most observable proxy: Look at where the compute goes, and you find out what tomorrow’s paradigm will be. But compute is quite general-purpose: Because top-tier chips are excellent across most applications, there’s no real telling what a cluster of them will be used for tomorrow. You could think that ownership of these clusters is an indicator – and yes, the hyperscaling nature of compute build-out suggests that we are probably not heading for a highly decentralised paradigm. But because compute can be readily partitioned off and rented out for different applications at different scales, even cluster size and shape only tell you so much. You would certainly not have been able to foresee the shift to reasoning models and inference scaling through publicly traceable compute allocation. Research breakthroughs are similarly fuzzy signals. Sometimes, new paradigms are a long time coming, as transformers were; sometimes, we only learn about new research once a developer has already turned it into a product-ready system. Even the most committed arXiv readers might have no more than a rough hunch.

Lagging Indicators

Lagging indicators are easier: We can simply look at the actual economic and societal effects of AI models – their effects on jobs or on growth, whether they cause harms or not. This works if we’re inching towards gradually more meaningful impacts and have the time to adapt to them. But that might very well not be the case. New paradigms can be forceful and effective enough to radically change capability levels overnight, making it untenable to wait for them to have widespread real-world effects. Consider the sudden jump in practical capability from making transformer models instructable, or from letting them think for longer; and now imagine the same relative jump occurring through another paradigm shift, starting from an already-useful, broadly diffused technology. We should certainly like to have some live oversight before the quarter’s GDP numbers are out. But even beyond paradigm shifts, the trajectory of AI’s real-world effects can be very discontinuous: For many months, we might see systems just shy of transformative ability, and a fairly minor capability gain could push them over the threshold where they might sufficiently uplift human ability for awesome and fearsome innovation, or where they might suddenly be able to make a scalable contribution to further AI improvements. But these periods – of chaotic uplift, of unsupervised improvement, of uncoordinated deployment – are precisely the most volatile episodes, and so exactly the ones our policies should tackle.

As it stands, we might only know that a paradigm is sure to be worth our policy attention once it’s already too late.

Policy Design under Uncertainty

So we cannot confirm the lasting accuracy of our technical snapshots in time to design accurate and comprehensive regulation. It’s easy to shrug at that and simply give up on the project of ambitious AI policy solutions. I think that’s mistaken, and government should not retreat from this important question on grounds of uncertainty. But that’s easy to say and much harder to practically navigate. Let’s consider two options in policy design: policy that works independent of the specific paradigm, and policy that adaptively responds to the specific paradigm.

Paradigm-independent Policy

First, we could make paradigm-independent policy. The most obvious part of this is policies broadly related to transparency: whistleblower protections, reporting requirements, safety and security protocols, third-party evals, etc. I think all these are fine and good and, if executed well, a clear improvement on the current state of policy. They also help indirectly, by clarifying to policymakers and the public what paradigm we might be in. That alone is a good reason to oppose a moratorium, but it doesn’t make for satisfying policy on its own just yet. Clearly, the stakes of ‘getting AI right’ go well beyond transparency obligations. But paradigm-independent policy does not scale to the big issues – there is no policy that can skirt the technical details and still remain sensitive to the idiosyncratic requirements of whatever advanced AI turns out to look like. Perhaps the closest are broad legal settings, like meaningfully expanding liability, or requiring AI to follow the law. But recent discussions around liability illustrate pretty well how contingent even such an ostensibly general provision is – it turns out that liability makes sense of ‘normal technology’ much more easily than of genuinely agentic systems that resist easy assignment of responsibility.

This also means that paradigm-independent policy alone often makes for bad politics. I know that safety advocates in particular are sometimes enamoured of small asks. ‘If we can’t even get the Pareto improvements through, what hope do we have of actually succeeding?’, goes the story. I think that’s mistaken. There is serious political downside to positing big problems yet providing small solutions. It’s a very bad political dynamic to face off against a deregulatory agenda by saying ‘AI is the biggest and riskiest thing to ever happen, and hence we need these minimal provisions!’. That disconnect makes it unattractive to get on board with your framing. If a policymaker wants to own an issue and is supposed to buy into a broad problem analysis, they usually prefer to have a solution ready to go. Telling people to be very worried about something while offering no solution is not very good politics. And so you make it much harder for policymakers to join your coalition if you don’t give them some ideas for what they could advocate. Minimal provisions clearly disconnected from the scale of the problem don’t cut it. ‘We should at least…’ is, paradoxically, often not a very powerful slogan.

Conditional Policy

Second, we might favour conditional policy, i.e. if-then regulation. That would entail passing conditional stipulations: We would pass a bill today, but its contents would not apply until some technical condition outlined within it was met. For instance, you could imagine benchmark targets or economic metrics determining when a rule would begin to apply. I think this is the kind of idea that looks elegant on a whiteboard but does extremely poorly in practice, for two reasons. First, we are nowhere close to the required sophistication of measurement. Any trigger condition we might propose will be one of two things: Either very vague, and thus laden with untenable regulatory uncertainty – such as the determination of an expert panel or similar; or so specific as to be open to manipulation, like many realistic sets of evals. Such evals might allow developers to game them, either by actively training toward them or, much worse, by sandbagging them to avoid regulatory scope despite a high capability level. My sense is that today’s leading evals are not ready to avoid these failure modes. This is highly regrettable, and a good reason to improve on the tricky political economy of evals.

Second, if-then also faces an obvious political problem: As a policymaker, you don’t get much credit for passing it. The political calculus for a lawmaker pushing through AI regulation is clear: They want to appeal to a concern in the electorate and demonstrate they have alleviated it. Ideally, that works through direct impacts; alternatively, a well-understood path to impact works, too. But an if-then commitment is neither of those. You pass a law now, and by its very design, it doesn’t do anything for quite some time. It might pay off at some point – or it might not. That makes it great for low-salience policymaking, where you primarily care about the outcomes of your proposals. But in a highly politicised environment with growing public interest in AI specifically, if-then commitments fail to satisfy the political requirements.

![シンガポール要塞鳥瞰圖 (Bird's-Eye View of Singapore Fortifications)](https://substackcdn.com/image/fetch/$s_!VGtK!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F59fb72ae-67de-4ad3-8d2e-350370462a5a_1000x726.jpeg)

Back to the Drawing Board

My takeaway from this assessment is this: I don’t feel we know enough about advanced AI to pass comprehensive regulation today. Regular readers will recognise that we have once again reached the unsatisfying part of the post where I suggest that others have to do something very difficult. In this case, I feel we either need reliable criteria to identify a lasting technological paradigm; or clever policy design that allows for conditional stipulations without becoming politically unattractive. Without progress on either, we are in no position to take a swing at getting broad-strokes AI policy right.

This is no endorsement of a moratorium – both because things could rapidly change and require us to act fast, and because there are plenty of low-hanging policy fruit left to be picked, for instance around transparency, state capacity, geopolitics, and societal resilience. But for the big-ticket questions of how to design governance so that AI goes well for all, I am not so sure.

I know that some readers will dismiss this argument because there is so little political support for AI regulation that none of this seems to matter very much. But I think such things change quickly: Between the aftermath of the moratorium fight, which has pushed some policymakers into pro-regulatory stances, and the political appeal of owning the issue of AI job disruption, some broader proposals could emerge soon. Besides, political windows are no law of nature. Windows do sometimes adjust to fit feasible and attractive policy solutions – if you are worried that good comprehensive proposals are politically infeasible, the best way to keep it that way is to never come up with any.

As political motivation to ‘do something’ about AI picks up and uncertainty still reigns supreme, I would urge frontier AI policy advocates to return to the drawing board at a slightly higher level of abstraction. The current platform is a patchwork response that does not live up to the central part of the challenge: regulating a technology that moves faster than policy. I know right now feels like crunch time – but regrettably, the ideas needed to start sprinting ahead on the politics alone are just not quite there yet. Getting this wrong could calcify misguided barriers and squander valuable political capital. Do we really know enough about the shape of the threat to raise the fortifications just yet? I do not think so. We had better find out soon.