AI & Jobs: Two Phases Of Automation

AI labor impacts will look normal before they look existential. This creates a timing problem for policy development.

Four weeks ago, I argued that we’re unable to propose useful policy on AI-driven job disruption. Today, I take a short follow-up look at one specific misunderstanding that has contributed to the lack of effective policy development. The misunderstanding arises from two ostensibly rival views of AI automation that feature prominently in the political discussion.

The first story is one of AI as a normal automation technology: on that view, AI job disruption is comparable to automation waves in the past, like industrialisation or agricultural mechanisation. The second view is one of AI as a great displacer, a technology that renders large swathes of human labour obsolete. Frequently, solutions that work in the former paradigm are derided as insufficient in the latter. Conversely, solutions that work in the latter paradigm are derided as extreme and trigger-happy in the former. Both readings of future disruptions have merit. But these models are not mutually exclusive – they’ll occur in sequence. That makes for unique policy challenges: We’ll have to deal with the upcoming second phase while in the grips of the first.

The Disagreement

Surveying the debate, it looks like there are two competing perspectives on AI-driven job disruption, with different policy prescriptions.

The first perspective says that AI job market effects will essentially be ‘normal automation’. Like any previous wave of labor displacements, so the view goes, the job market effects are essentially transitory and net-positive: Some jobs get disrupted heavily, but aggregate employment is stabilised by the general increase in economic activity, by new possibilities opened up by technological progress, and by a mix of Jevons’ paradox and cost disease effects. On that view, we can learn quite a lot from the past: from industrialisation, agricultural mechanisation, and the introduction of the internet. Policy solutions ultimately have to deal with the frictions of a transitional problem: provide an off-ramp to workers in exposed sectors, strengthen sectors that can absorb displaced workers, etc. More fundamentally, this view provides an optimistic perspective: In the end, AI-driven productivity gains and deflationary effects will help everyone, because there will still be enough jobs to go around to ensure everyone can partake in AI-powered abundance.

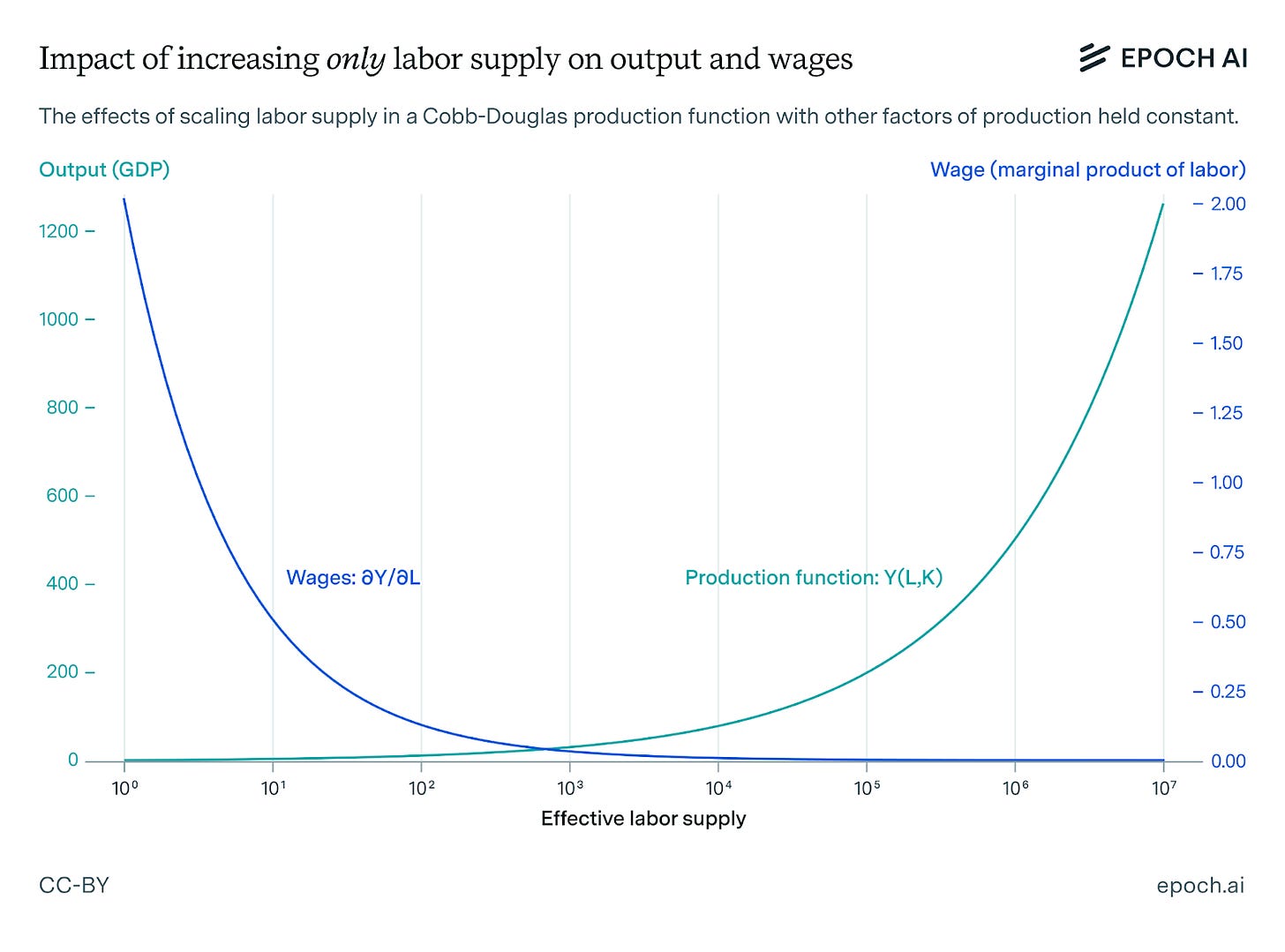

The second perspective, instead, expects fundamental displacements. This view holds that AI disruption is fundamentally different because AI agents compete with humans on a more general level. That means that for most or all human jobs, there will at some point be an AI agent that carries them out more cost-efficiently. And because agents can be duplicated at will, comparative advantage offers humans little protection; absolute cost efficiency is all that matters, and it favours AI. As a result, many humans might no longer have an economic role to play – and hence lose out both on an income stream that lets them partake in AI abundance, and on the political capital implicit in their ability to withdraw their labor.

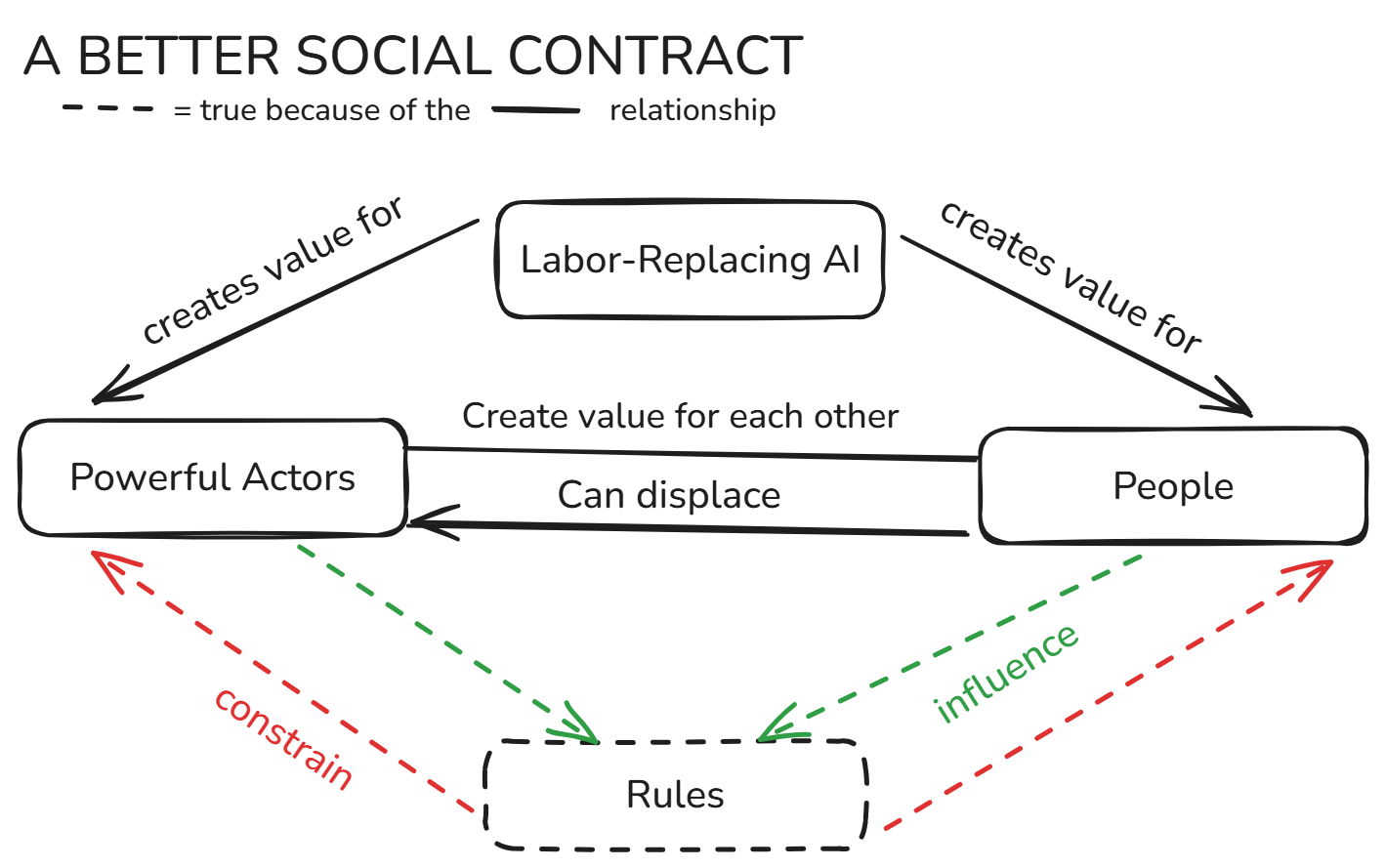

Because previous automation technology has been so limited both in terms of scope and duplicability, this second scenario is unprecedented – and it could end up suppressing human wages below subsistence level and their political capital below a level necessary to negotiate different means of income. This view fundamentally requires deeper and more systematic action. Advocates have described the necessary policy as changes to the ‘social contract’, by which they mean moving away from a fundamentally labor-centric notion of how people acquire goods, services and political say. Unmitigated, this latter view is pessimistic: By default, we get AI abundance, but most don’t get to partake.

Two Phases

Debates on these two perspectives often invoke a sense of mutual exclusivity: either we get normal automation or fundamental disruption. But I think the more likely version is that these views are not exclusive futures, but sequential phases. I think this is a fairly intuitive point that has been made in the scholarly literature before. But it does not prominently feature in the policy discussions – where policymakers often either project optimism about purely net-positive automation, or invoke fears of immediate disruptive job loss – even though it comes with fairly impactful political consequences. Let me lay it out briefly:

The first phase happens as soon as AI reaches the level of many previous automating technologies. AI gains a functional absolute advantage over humans in some narrow domain – making it better to deploy an AI agent for task x than a human. Or, at least, AI gains a comparative advantage – making it efficient to employ an AI agent for task x, because then your humans can do different things instead. On that view, AI agents’ work might often still be bottlenecked by human activity, for instance because these agents need to be managed, or because agents can’t carry out the entire task profile of a given job, hence still requiring humans to do some of it. Human labour moves either to tasks or to domains that AI agents hold no advantage in. I think most observers agree we are headed for this first phase fairly soon, perhaps within the next year or two: long-horizon agents in narrow domains requiring mostly standardised computer use, like software engineering, seem directionally tractable and are the central research priority of leading AI developers.

Even in that first phase, there are definitely still losers: for instance, those whose jobs used to heavily involve carrying out displaced tasks. But by and large, we should expect macroeconomic fundamentals that have held throughout previous automation waves to hold again: Jevons' paradox means that as AI makes certain tasks much cheaper to do, demand for such tasks goes up, so even if individual jobs get displaced, total demand for work in those areas often grows. Baumol's cost disease works in the other direction: productivity gains in AI-dominated areas make human-intensive services relatively more expensive and thus better-compensated. And as we've seen in previous automation waves, new job categories emerge around the technology itself; both enabled by it, like potential one-person firms, and enabling it, like plenty of work in building out and integrating AI.
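
To make the Jevons intuition concrete, here is a minimal sketch under stated assumptions that are mine, not claims about real labour markets: demand follows a constant-elasticity curve, prices fall in proportion to the human hours still needed per unit of output, and the function name and parameter values are purely illustrative. It shows that total human hours in a partly automated area can rise or fall depending on how elastic demand is.

```python
def human_hours_after_automation(hours_share_left: float,
                                 demand_elasticity: float,
                                 baseline_hours: float = 1.0) -> float:
    """Total human hours demanded in an area after partial automation.

    hours_share_left: fraction of the original human hours still needed
        per unit of output (0.25 means AI took over 75% of the work).
    demand_elasticity: price elasticity of demand, as a positive number.
    Assumption: price falls in proportion to the human hours per unit.
    """
    price_ratio = hours_share_left
    # Constant-elasticity demand: quantity scales with price^(-elasticity).
    quantity_ratio = price_ratio ** (-demand_elasticity)
    return baseline_hours * hours_share_left * quantity_ratio


# Elastic demand: output grows 8x, so human hours double despite automation.
elastic = human_hours_after_automation(0.25, demand_elasticity=1.5)
# Inelastic demand: output only doubles, so human hours halve.
inelastic = human_hours_after_automation(0.25, demand_elasticity=0.5)
print(elastic, inelastic)
```

In this toy model, an elasticity above 1 is the Jevons case where cheaper tasks mean more total human work; below 1, the same automation shrinks it. Which regime a given sector is in is an empirical question the sketch does not answer.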

The second phase happens as soon as AI agents cross two thresholds. First, they become autonomous enough that there are no domain-specific tasks left for humans to do in areas where agents are effective. For example, you might think of this moment as the transition from hyper-efficient software engineers that basically become their agent swarms’ product managers toward wholesale AI agent firms that deliver software products. And second, they become general enough that there are not enough domains to displace workers to: The agent firms are no longer just the most effective at software engineering, but the most effective at many things. At this point, continued human employment no longer relies on absolute advantage, but largely on comparative advantage. That’s a perilous position: Compute efficiency cost curves imply agents will get cheaper, while human wages can only fall so far. As a result, the attractiveness of hiring comparatively advantageous humans will keep falling over time.
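
The squeeze between falling agent costs and a wage floor can be sketched with simple arithmetic. This is a deliberately simplified illustration: it compares cost per task directly rather than modelling comparative advantage, it assumes agent costs decline by a fixed share each year, and all numbers and names are hypothetical.

```python
from typing import Optional


def years_until_agents_undercut_floor(agent_cost: float,
                                      annual_decline: float,
                                      human_floor_cost: float,
                                      max_years: int = 100) -> Optional[int]:
    """First year in which an agent is cheaper per task than a human
    paid at the lowest wage humans can accept (the floor).

    agent_cost: today's agent cost per task (hypothetical units).
    annual_decline: assumed yearly fractional drop in agent cost (0.5 = halves).
    human_floor_cost: human cost per task at the wage floor.
    Returns None if the crossover does not happen within max_years.
    """
    for year in range(max_years + 1):
        if agent_cost < human_floor_cost:
            return year
        # Agent cost keeps shrinking; the human floor stays fixed.
        agent_cost *= (1 - annual_decline)
    return None


# Agents start 10x more expensive than floor-wage humans and halve yearly.
crossover = years_until_agents_undercut_floor(100.0, 0.5, 10.0)
print(crossover)
```

With these made-up inputs, the crossover arrives after four halvings. The point is not the specific number but the asymmetry: one side of the comparison keeps moving while the other cannot.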

When will that happen? There is much to be said about how difficult it might be to cross these thresholds. I suspect that real-world frictions mean that it’ll take longer than some of the most aggressive timelines allege, but I see no reason why the challenge would not be tractable in principle. And so, given all the investment flowing into solving it, I expect it to be solved at some point.

But there is good reason to believe the two phases are distinct; I don’t expect there to be a sufficiently fast explosion of capabilities that they blur. Generalising to a bunch of fuzzy, very difficult-to-train domains seems, well, very difficult. At the very least, barring a major breakthrough, it seems to require building a lot of expensive and expansive environments for reinforcement learning – and the jury is still out on whether that is even cost-effective for the ‘last mile’ of automating some task profiles. I also expect political backlash to do its part in slowing down the diffusion of agent firms and in keeping around jobs that increasingly become sinecures. All that makes me somewhat confident that the second phase will come, but will not come immediately after the first phase. My suspicion is that between homeostatic effects and difficulties interfacing with the physical world, we’re looking at a delay of perhaps five years between the first and second phase – but I’ll refer you to the excellent body of predictive work on this issue instead. For the purpose of this piece, you only need to believe that the phases will be somewhat distinct.

Policy Implications

This has implications for policymaking on labor disruptions – both on near-term fixes and long-term systematic issues.

Near-Term Policy

The first implication is that even if you’re very confident that AI is the big displacer, you should still endorse ‘prosaic’ policy measures aimed at normal AI disruption. One reason is that they’ll reduce quite a lot of the unnecessary suffering, friction and backlash that would result from not getting the first phase right. If AI disruption is sequential, the mere fact that you eventually expect big disruptions no longer implies that effective absorption-focused policy, shallow redistribution, wage insurance, and sound general economic policy to capitalise on AI-driven growth are futile.

On the sequential view, prosaic measures matter for another reason: because they shape the political environment in which we’ll eventually make the policy for the second phase. The most likely policy windows for deeper interventions – changes to the ‘social contract’ – occur further down the road, when the technical makeup and economic impact of AI systems become clearer. Without policy to address normal disruption, these windows will occur in a highly turbulent political time. As I’ve written in greater detail before, even fairly shallow disruption creates fertile breeding ground for populist hijacking of policy conversations and tends to let wildly unproductive ideas run amok. There’s also a related coalitional effect: The debates in the first phase will shape allegiances, party positioning and constituencies. For instance, if this debate is polarised along party lines, that will carry over to future political debates; and if it’s dominated by particular politicians, they will remain key players.

All in all, it’s in the best interest of those concerned about the future of the social contract to calm the waves through prosaic policy; and engaging on the intermediary debate is valuable terrain-shaping even if you’re happy letting creative destruction run its course.

Social Contract Policy

The sequential view has another implication for long-term policymaking around the social contract: Even if we’re ultimately due for unprecedented displacements, it won’t look like it for quite a while. Instead, the early future will look robustly like normal automation. Advocates for more fundamental, ‘social contract’ style work face a tricky political economy at that point: As AI leads to new jobs, stable employment and growing economic activity, it’ll look like their detractors have been right. At the very time at which their suggested measures become most necessary, it’ll look like they’ve mostly been wrong. That problem has a policy design level and a politics level:

The policy design level is: how do we know? If we expect the early trajectory to be the same, how do we identify whether we’ve been right or wrong about a second phase coming up? And, perhaps more importantly, how do we put this criterion into benchmarks and conditions that are legible from the outside, to skeptical stakeholders? I think the obvious low-hanging fruit here is making automation data collection a policy priority. Ideally, this happens as a combination of enabling institutions like the Bureau of Labor Statistics (BLS) to collect more fine-grained data and compelling labs to greater transparency around how their models are used to automate labour. The latter part is lacking to a dangerous extent. Data like Anthropic’s on economic impacts is interesting, but is so incomplete that it risks distorting findings altogether – for example, it lacks API and enterprise data, so it skews toward chatbot applications, and thus away from actual automation toward individual augmentation. More sophisticated data needs to be available so that researchers and policymakers can get a grasp of trends before they manifest as disruptions, especially for assessing when the first phase of creative destruction might transition into the second phase of societal disruption.

The politics level is closely related: We should be careful not to oversell automation scenarios, and especially not to oversell rebuttals to the ‘normal automation’ view. If the social contract platform is widely understood to assume that there is no ‘normal automation’, the likely sequence of automation phases will lead to a deep credibility problem. Currently, we are in a phase of bidding on credibility: Experts offer their differing predictions to policymakers and formulate policy asks based on these predictions. Based on what happens in the real world, policymakers will be inclined to favour the ideas of the experts that have ‘gotten it right’. But given a sequential trajectory, it’s very difficult for someone worried about fundamental disruptions to make verifiable predictions today: no matter if they’re right or not, it’ll first look like they’ve been wrong.

This is something I am already worried about with regard to the exciting recent discussion around job disruptions and the ‘Intelligence Curse’. I think we run a risk that policymakers will see the first phase of ‘normal’ automation occur, see successful absorption and stable employment, and draw the wrong conclusion – namely, that the more fundamental concerns had all been wrong. Avoiding that requires communicating the sequential view upfront. This is not unlike early cases of AI safety advocacy that oversold mid-term risks of misuse and suffered credibility losses afterward. Those worried about fundamental labor disruptions should avoid the same mistake.

Outlook

There are good reasons to believe AI job disruption comes in two phases: first as normal automation, then as a more fundamental shock. Attempts to address either need to grapple with both. Policymaking for normal automation must recognise its shortcomings regarding the unique prospects of AI displacement. And policy development aimed at shocks to the social contract needs to acknowledge the politics of normal automation on the way.