Datacenter Delusions

Simply building compute capacity does not make for a convincing national AI strategy.

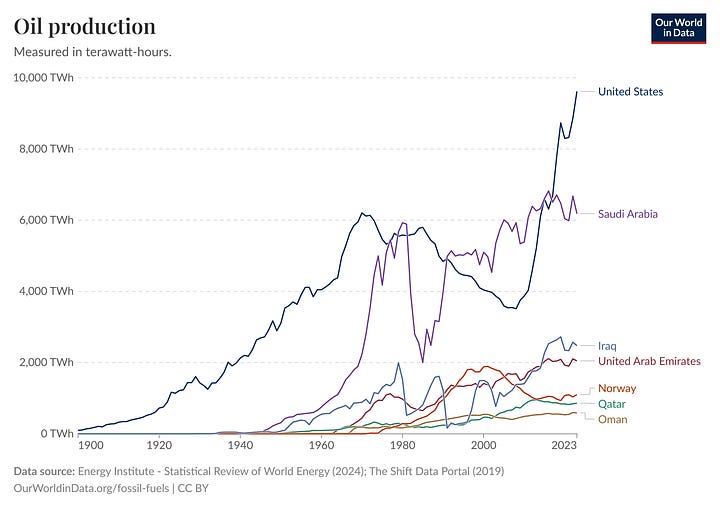

AI policy analysts often present compute as a resource somewhere between oil from the 20th century and Spice from the science-fiction universe of Dune – a near-mystical commodity that bestows wealth and political power onto its holders. That became obvious again in May this year, when the US government announced a major deal with the UAE on the export of AI chips. G42, an Emirati AI holding company, would purchase and run a large number of frontier Nvidia chips. US observers have done well to analyse the deal from the US perspective, but I have seen less discussion of a variable central to evaluating the Emirati side: Can the UAE – can anyone – become an ‘AI oil state’?

Questions on the strategic upshot of compute buildouts bear repeating in many AI middle powers. In fact, the picture there is usually much worse than in the UAE case: Whether it’s EU gigafactories, South Korean megaclusters, or UK attempts to scale up datacenter capacity, nations are pursuing domestic compute supply. Unlike with the UAE, I’m increasingly unsure what most of these countries actually want to do with their compute. This piece looks at viable strategic thresholds for compute buildout – and finds that many enthusiastic compute buyers are not on track to deploy and leverage the resources they’re attempting to amass. Merely controlling some compute does not yet make for controlling the universe.

Four Domestic Thresholds

There are four obvious target thresholds for domestic compute buildout: the capability to run sovereign specialised inference, to run all domestic inference, to train narrow models, or to train frontier models. All of them have some strategic merit, but they also place drastically different requirements on the modalities of the build-out project. None of them is best served by simply buying and deploying a lot of compute. Beyond these thresholds lies the prospect of becoming an intelligence exporter, i.e. an ‘AI oil state’.

Specialised Inference

The first threshold is ‘enough compute to run specialised local inference’. There are some specific AI deployments you might think are particularly important to run at home. There might be three reasons for that: First, deployments might be too sensitive to off-shore, such as when they process confidential, private, or security-relevant information. Second, they might be too important to off-shore, if even momentary disruptions of service would be perilous, such as when AI is deployed to manage vital systems, from power grids to financial markets. Third, deployments might require very low latency, making the distance to offshore datacenters a dealbreaker. This might be the case where AI needs to react fast, either where it’s tied into fast-moving physical processes such as in manufacturing, or where rapid data processing is required, such as – again – in financial applications.

A strategy aiming to cross this threshold would ideally formulate demand projections for specialised inference needs based on idiosyncratic national requirements – for instance, if you expect your economy to require a lot of low-latency inference, it makes sense to plan for more capacity than other countries would. It would also put a particular focus on secure datacenters: If most of your buildout aims at sensitive data or security applications, you obviously want the resulting infrastructure to be resilient to espionage and sabotage.

Inference Sovereignty

The second major threshold is ‘enough compute to run all domestic inference’. This tracks a general global demand for sovereign supply of vital economic goods: you don’t want to be too reliant on intelligence imports, just as you don’t want to be too reliant on energy imports. In many ways, inference asks the sovereignty question in a more pressing manner because you can’t stockpile it. You can build a strategic petroleum reserve, you can fill a gas reservoir for the winter, but you can’t stockpile AI tokens. So any disruption of intelligence supply becomes existential immediately, with little room for a policy response. From that point of view, it seems reasonable to want to service inference needs locally.

On the other hand, of course, there’s an associated cost issue. Building datacenters in many advanced economies comes with greater overhead and higher energy costs than centralised buildouts in developer countries or the Gulf. So after you’ve built your inference compute, you also need to create demand for it – perhaps through subsidising the domestic inference price, perhaps through placing regulatory requirements on domestic customers. Either way, building out a full inference stack is a lasting commitment in need of expensive upkeep, and by no means an imperative. It’ll also almost inevitably be much more costly and economically restrictive than simply importing the intelligence from elsewhere. All in all, a bit like reaching full energy sovereignty today.

In addition, the amount of compute you may need to service inference needs is highly variable. It depends greatly on inference efficiency, and on the inference/training split required for tomorrow’s frontier systems. The extent of security requirements on these inference datacenters also seems highly variable: If you expect most of your inference needs to be serviced through running closed frontier models, you want secure datacenters so that these models will be exported to you without fear of theft. But if you expect to mostly run open-source or sub-frontier models, you might be able to forgo espionage-related security measures. Reaching full inference sovereignty, then, appears to be a moving target with potentially very high costs. I don’t see governments tracking it at present.

That realisation should cast some doubt on assuming inference sovereignty as the default target of compute build-outs. I think it’s very likely that inference sovereignty will not be a viable goal for many countries, and might not be so attractive for a lot of them either. Being ‘cut off’ is a substantial sovereignty risk, but it never quite goes away: Between general interlinkage in the global economy and the fact that even an inference-sovereign country still needs to regularly import new chips and new models, we are looking at a marginal sovereignty gain as opposed to a paradigmatic difference – making inference sovereignty at least a contentious strategic objective.

Narrow Training

The third major threshold is ‘enough to train your own specialised models’. You might think there are some specialised AI models you would want to have full control over – over the data they’re trained on, over who knows how they work or decide. Again, this is most relevant where you want your AI’s decisions to be opaque and unpredictable from the outside, such as in military or broader security applications, or in cases where you want to extensively train on proprietary and confidential data, such as from consumers or from manufacturing processes. Relatedly, beyond material reasons for domestic training, regulatory frameworks like the EU’s GDPR might require you to carry out such training domestically anyway. In all of these cases, a country might be interested in creating training capacity even if it plans to import a large share of its models.

This threshold might be higher or lower than that for running all inference – much of the comparative compute cost depends on inference cost curves, efficiency gains, and the most advisable model suite for widespread economic deployments. Specialised training might also require different chips – for instance, you might think that a specialised training run is best served by a small number of cutting-edge chips, while general inference needs can be supplied by last-gen chips like the A100 or even specialised inference chips like the H20.

Frontier Training

The fourth major threshold is ‘enough compute to train frontier models’. Much has been said about this prospect elsewhere. In short: training frontier models is really very difficult. You need huge amounts of compute and a corresponding supply of top-tier talent to even stand a chance, and even then you run the risk of falling short. Other than perhaps xAI, each of the many ambitious attempts to catch up to the model frontier has failed, notwithstanding high capital expenditure. If this is your strategy, then I understand why you’d simply buy all the compute you can possibly get – if you aim at frontier model development, that seems like a reasonably robust move. But if this is your strategy, you also need a good answer to all the other challenges, beginning with talent supply and the question of why everyone else has failed. Middle powers engaged in compute buildout do not seem intent on answering these questions – and so I have trouble believing that frontier training is the threshold they’re trying to cross.

Beyond The Threshold: AI Oil Powers

The above thresholds have all been domestic. Beyond them, countries might also aim to create an oversupply – to become an exporter of intelligence. Compare this, in the most general terms, to being a major gas or oil exporter: Through extensive compute buildouts, you reach a position to sell GPU access.

What kind of leverage might being an AI oil power give you? I can think of four upsides:

First, you get to make large, profitable contracts for renting out compute capacity. If you have a reputation as a reliable compute provider, low energy prices, and efficiency gains from large-scale buildouts, buying GPUs and renting them out at favourable prices might simply be a decent investment – especially if you think you’re still early to the AI issue.

Second, if you hold a significant market share, you can squeeze global compute supply and prices. Of course, there are hard limits to the supply manipulation you can do, lest you lose customers and the allies who export compute to you. But the history of oil shows there are options – ‘energy shortages’, double bookings, necessary repairs and much more can give you wiggle room to restrict supply and exert leverage over global prices.

Third, in an increasingly bipolar AI paradigm, you might be able to turn your coat as a nuclear option. Presumably, most AI oil powers would be dependent on compute imports from a great power. Today that’s mostly the US; in the future it might also be China. In a mostly economic paradigm, reneging on your deals and embezzling your imported GPUs is of course strategic suicide. But in an actual escalation of the US-China conflict, that option still remains – and if you think the US-China conflict will come down to AI capabilities, a net difference of two million H100-equivalents at a critical time might just be substantial leverage.

Fourth, you can throw a rope to powerful, but AI-starved, players. Major economies and military powers that are not the US and China might soon realise they’re hopelessly behind on AI and scramble to catch up. But their often-fraught energy situations and the lack of readily available compute to buy mean that even if a country like Germany decided to ‘wake up’ in 2026, it wouldn’t just be able to build huge datacenters the day after. That’s where AI oil powers come in: They can supply the necessary compute to the panicking middle powers – at a high cost. Compare this, if you will, to the diplomatic inroads that Qatar made with the German government when the latter scrambled to restore its gas supply in the wake of Russia’s attack on Ukraine.

What do you need to become an AI oil power like that? First, obviously, you need a lot of compute. As a rough orientation, a major oil power in the 2010s would control around 5% of global oil production. There are some reasons to think you’d need a larger AI share for similar leverage, e.g. the lack of obvious coordination venues like OPEC, and some reasons to think you’d need less, e.g. the lack of stockpiling and thus more immediate economic effects of supply changes. Almost no middle power is on track for that position.

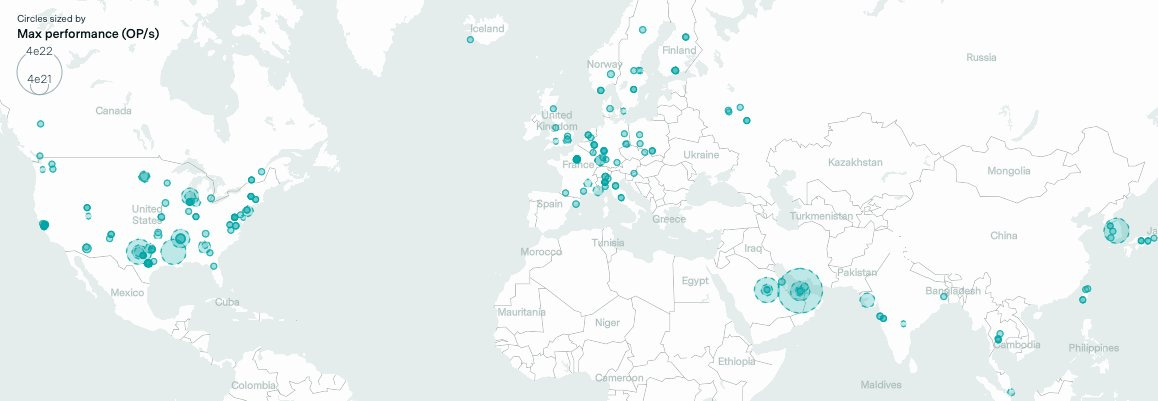

The aforementioned UAE deal might be a notable exception: If everything goes through and the UAE has the resources and political will to get the chips online fast, it seems plausible that their domestic computing power would add up to ~2m H100-equivalents through 2026, and up to 20m H100e in the 2030s. Depending on timings, Nvidia shipment pace, TSMC disruptions, etc., that amount could make up perhaps 5-10% of global compute supply, going by related projections from AI-2027 and Epoch. Note that this is the absolute best-case attempt to become an AI oil power, carried out by a state with near-unlimited capacity to accelerate build-outs, spend money, and provide energy supply – and it looks like it might barely make the UAE the equivalent of a major OPEC member. To compare: The entire EU’s gigafactories project, combined with France’s more ambitious buildout attempts, seems set to make up perhaps 2% of global compute supply once it comes online in early 2027.

But compute supply alone is not enough. These chips don’t last forever: you will have to upgrade to next generations, and even your remaining chips break and deteriorate over the years. Does that mean there is no effective lasting advantage to buying compute? Not quite. First, a few years are a long time, especially given that there are no intelligence stockpiles. Having a lot of compute now might be used to leverage better compute deals in the near future, and to guarantee continued build-out. But mainly, I think the central feature of an AI oil power is the ability to deploy compute cheaply and at scale.

Many countries, including the US, may struggle to continue the pace of datacenter buildout. Between regulatory constraints, electricity grid shortcomings, high energy prices, and possible political backlash against AI, it’s conceivable that Western democracies will have enough GPUs, but lack the datacenter infrastructure to run them. An ideal AI oil power faces no such issue – Gulf autocracies can wave away political backlash and regulatory oversight, and usually do not lack energy supply. The long-term merit of being an AI oil power might lie not in being ‘someone who has a lot of compute’, but in being ‘someone whom you can send an H100 and they can actually get it up and running’. Only very few countries are positioned to take that role. I think the UAE will. Saudi Arabia might. Quite certainly, those with high energy prices and regulatory barriers, like the EU, UK, Japan and South Korea, are not.

No Man’s Land

Aiming at any of these thresholds might be a promising play for some countries. Aiming at none of these thresholds, however, seems ineffective. You might think that buying GPUs and plugging them in is a robust strategy that transposes into any of the above strategies. This is not the case. Between the kinds of chips and the kinds of datacenters you need, different thresholds require different strategic perspectives from the get-go. Any uncoordinated compute strategy that misses the point risks getting lost in the middle. And getting lost in the middle is bad – you don’t want to float excess inference capacity that doesn’t contribute to any strategic goal.

Simply building datacenters and renting out relatively small batches of compute ad-hoc with no long-term planning horizon is not a very attractive business model for most countries: On the one hand, the market for ad-hoc inference compute is and will be fairly tight. Rental prices for A100 and H100 capacity have already been volatile, and at times low, in recent months and years. More lucrative, stable contracts arise from stable and reliable capacity – exactly the kind of capacity an in-flux, meandering national compute strategy would be unable to provide. On the other hand, running a datacenter in most middle powers is expensive: There are fewer scaling effects than in major countries, less political support to offset regulatory costs and barriers, often high energy prices, etc. – and in effect, your inference prices will have to compete with AI oil states and great powers. Building a datacenter without an underlying strategic perspective is at best a highly costly contingency, at worst a disastrous investment.

Unfortunately, when I look at most AI middle powers with ambitious compute projects, I see them headed straight for No Man’s Land. Most projects on the current books aim at buildouts roughly between 100k and 500k H100-equivalents within the next 18 to 24 months (in line with previous prescriptions of the scrapped diffusion framework), with few special provisions for security, few accompanying plans to leverage that compute for ambitious training, and no salient evaluations of specialised or general inference demands. More ambitious plans, like a potential 3GW datacenter in South Korea, are planned so far into the future that their likelihood is difficult to assess. By and large, these attempts also do not seem to be integrated into any sort of broader AI sovereignty strategy – in fact, as I’ve written in greater detail elsewhere, the purveyors of these compute projects largely seem content to wait and see what kind of diffusion framework the US government decides to cook up next. At the very least, none of their respective strategies convincingly aim at any of the above thresholds.

As a saving grace, you might think that these countries are simply building out to gain a share of a generally important market as a robust slice of the future. But the scale of buildout they’re currently chasing is just not nearly enough to gain a robustly useful market share. On sheer scale, most of these countries are simply hilariously outgunned by the US hyperscalers. The general advantages of market share – such as future option value – are not reachable unless you have a good business case to motivate sufficiently large buildouts. If there are no buyers to sustain your first wave of clusters, your compute projects will politically collapse under their own weight. This necessary business case can arise from profitable deployment prospects – which most middle powers don’t have. Or it can arise from a buildout’s correspondence with a major national strategy and thus from enduring political motivation for government spending – which middle powers also seem to lack. Even for the ‘option value’ prospect of compute buildout, a clearer strategic focus is needed.

Outlook

Where does all that leave us? In need of fine-tuned compute strategies, I think. Compute is indubitably very important. Countries need clarity on how they want to service which inference needs, on whether they expect any training needs, and on whether they really have what it takes to be an AI oil state. Only then can they make informed plays for compute build-outs. Clarifying the strategic perspective only after building the datacenters and buying the compute is a highly imperfect solution even today – even if it is perhaps necessary for practical reasons. And as strategic needs for different thresholds diverge further and further, the ‘robust’ strategy is getting less robust by the day. The world is moving fast – ‘just build compute’ has become yesterday’s slogan. Compute strategies are in dire need of greater strategic sophistication.