AI Is Not A Product

Product-focused regulation falls short of AI's promise and ignores its generality and autonomy. We should pivot to input-based policy.

A few years ago, in a simpler time, the challenge of making advanced AI go well was alchemy: instilling values into neural nets and making prophecies about how they might conquer the stars. As technical progress and growing policy relevance have made AI feel more ‘real’ as an issue, the conversation has moved on. Today, regulatory discussion often treats AI systems like a product, if a particularly important one. That makes sense, both because current AI systems are products and because an established blueprint helps proposals politically. It also makes sense because in many ways AI is in fact a ‘normal technology’, and we shouldn’t lose sight of the very normal ways in which it affects the world.

But there is a fundamental shortcoming: the product parallel makes us evaluate AI systems based on how little they change the world, scoring their downsides against the downsides of already-existing technology. This approach works where new technologies are similar to old ones: are self-driving cars safer than human-driven cars? Are e-cigarettes healthier than plain cigarettes? By near-universal admission, AI will be different. We want AI to change the world: to deliver groundbreaking research advances and rapid efficiency gains, and to make previously scarce intelligence abundant. This change happens along a jagged frontier, not only of capabilities but of harms and benefits. That means that as some things get worse, others get better. Product regulation focused on domain-specific effects leaves little room for the system-wide trade-offs involved. As a result, we lack the tools to make them.

What’s the harm of getting this framing wrong? I see two risks. First, the product regulation advocates succeed, we wrap AI technology in constricting product guidelines, and it falls short of its transformative potential: a technological revolution, supervised by Ernst & Young. Second, the product regulation platform becomes the canon of AI policy advocacy but fails because it turns out to be untenable. With policy advocacy tied up in a dead end, we lack the political capital and ideas for sensible regulation, and miss out on the regulation needed to address serious harms.

We should, as it were, thread the needle between these futures. Better policy discussion requires moving on from the product view of AI, which evaluates systems based on their outputs and outcomes. Instead, I believe we should endorse and hasten the trend toward aiming policy at the inputs. There have been promising recent proposals in that vein: policy aimed at the entities building AI models, and at who controls data, compute and models. This piece makes the broad case for pivoting frontier AI policy toward these frameworks.

No Tools For Trade-offs

The first fundamental issue with the product view is that it leaves little room for the unprecedented trade-offs that arise because AI is a cross-cutting technology. Product regulation typically assesses downsides and deems them acceptable or not. Liability, to take one instance of a product-focused regulatory paradigm, doesn’t keep score: you don’t get a get-out-of-jail-free card for one tort claim because your systems have helped enough people.

Disruptive AI systems constantly introduce cross-issue trade-offs. The very same advanced AI system that can be deployed as a highly helpful tutor to children has potential applications as an uplift tool for dangerous criminals. The capabilities at the root of both uses, such as the didactic ability and general knowledge of advanced models, are fairly inseparable. If you can’t separate the downside from the upside, policy decisions must involve trade-offs. Product regulation offers no framework for making them. At best, it leaves them to rough intuition; more likely, I think, political incentives will drift toward a patchwork of product restrictions instead.

We risk running into a ‘blind men and the elephant’ scenario in AI policy: debate around introducing product-style regulation is set to take place between interest camps that each see only a very limited part of the puzzle. Once AI disruption reaches a field, that field will see only its narrow impacts: scam watchdogs will want to reduce the risk of scams, the biosecurity community will flag pathogen development risks, the unions will see the disruption to jobs. All their pitches make great political rhetoric and appeal to some allied policymakers; who doesn’t want to protect citizens from scams and criminals and high prices and bad weather? As AI grows, so does this effect: it’ll reach most areas of product regulation, and we’ll see calls to regulate AI according to its deployment in a host of different contexts.

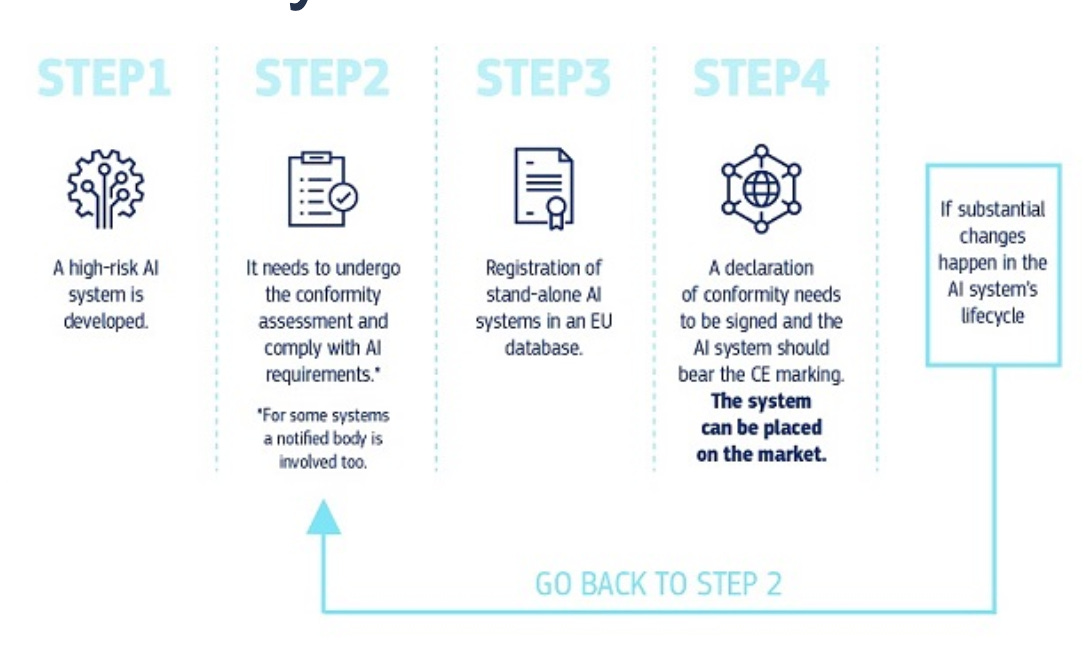

The EU AI Act makes for a good example of the resulting confusion; its most puzzling and burdensome elements are those where AI systems are assessed against overlapping sets of criteria, subjecting them simultaneously to general-purpose, risk-specific, and sector-specific regulatory requirements. This gets worse as systems become more general and product regulation has to bring more lobbies and their criteria on board. The resulting trend drowns the development of general-purpose systems in redundant bureaucracy, and hamstrings compromise by enshrining niche lobbies for many different regulatory perspectives.

Political discussion and policy development alike are incentivised to identify narrow vectors of harm and pass regulation to prevent them. The resulting policies inadvertently hit tremendous upsides that have little to no natural lobby in the policy conversation. Once the limits of that approach become clear, it’s prone to political backlash and rollbacks that strip away all effective protections, ultimately failing on the risk-averse merits as well.

Autonomy Breaks Causal Chains

The second challenge to the product view is the autonomy of AI agents, which disrupts the product-focused categories of ‘users’ and ‘developers’. Currently, it’s still easiest to conceive of AI systems as products, and thus to scoff at more arcane policy proposals like control and alignment. Developers advertise AI systems for a purpose, and individuals use them for a purpose. If the individual misuses a product, the resulting harm is their fault; if the individual uses it as advertised and it still causes harm, that’s the developer’s fault. Any inclination of that product to do anything but facilitate its proper instrumental use is at best annoying and at worst censorious. So the regulatory reflex to the current state of AI is to borrow from the ways in which we treat all kinds of other tools: liability, ex-post enforcement, and generally regulating who’s responsible for preventing what kind of outcome.

But that understanding will quickly come apart as agents become more autonomous, because the trend toward autonomy disrupts the direct proxy relationship between individuals and their tools: the more autonomous an agent is, the less we can effectively ascribe responsibility for its actions to someone else. There will be rogue instances of AI agents. There will be shared agents operating on behalf of many distinct users. There will be agents with instructions vague enough to make clear they’re not a direct proxy for anyone or anything. All that makes treating them like products a woeful mismatch.

This point is made very well in Dean Ball’s objections to applying strict liability to advanced AI systems: because you, as a developer, deployer or user of an AI system, can’t predict in any great detail what exactly it will do, being saddled with liability for its actions can paralyse deployment altogether. Strict liability, i.e. liability that applies even if you were reasonably careful, only incentivises risk-minimising development if it’s possible to minimise the risks. But the jagged harms and benefits discussed above make that impossible, because liability does not score you on your net effect. The important takeaway from that debate is that AI policy cannot (only) be concerned with outputs, because these outputs are often inscrutable and too far removed from product development and deployment decisions.

The upshot: as AI systems become more autonomous, trying to govern them in terms of their ultimate effects on the world often ends up confusing and burdensome. Defining all the ways in which a transformative technology is allowed to transform the world, and only then deploying it, seems a less and less tenable approach to policy. We should start with the inputs instead.

Narrow Views Miss Compound Effects

The first two points are about how product-level regulation can be too onerous and too limiting for AI and agent development. On the other hand, treating AI as a product also risks missing broader systemic downsides. Social deployments of AI, such as AI therapists, romantic partners, personal advisors and decision-making advice, as well as the labour disruptions that follow, obviously can’t be assessed on a product-by-product level: many of their effects on society depend on their general proliferation. It’s not the release of an AI companion or agent that changes the world; it’s the contingent and complicated diffusion through society that follows.

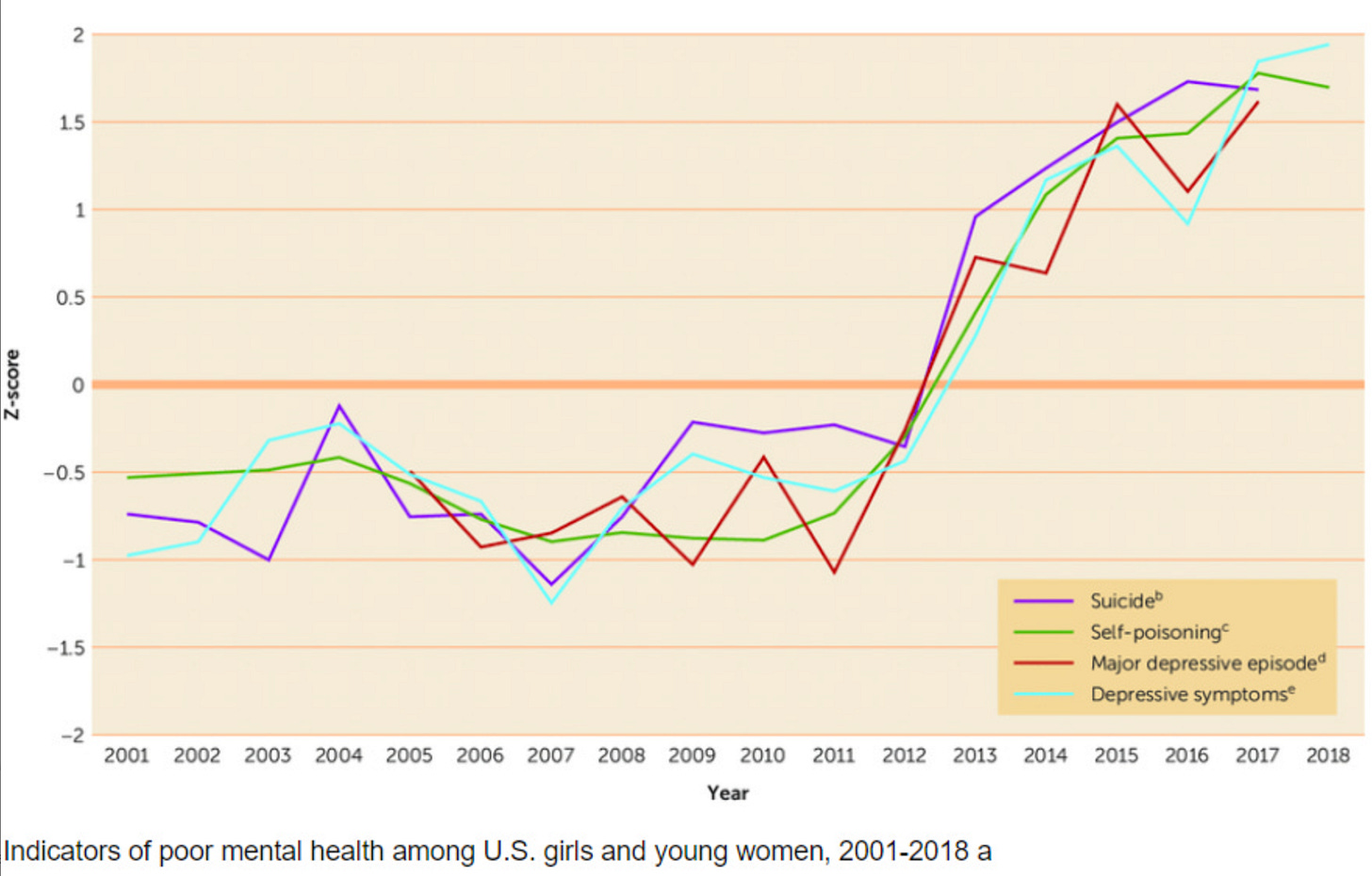

Consider the famous ‘it’s the phones’ debate, which links the proliferation of smartphones and social media applications to a downturn in mental health among teenagers: a massive harm downstream of product deployments.

But evaluating single products would have fallen drastically short of appraising these systemic implications. The mental health effects were not the consequence of the iPhone’s initial release alone, but followed from many marginal increases in smartphone availability; they aren’t directly linked to any one social media service, but to the cumulative proliferation of such services among children, the structure of their recommender algorithms, and much more. The release of any one of these products, however, surely didn’t meaningfully cause the effect. Product regulation is ill-equipped to answer this version of ‘the Sorites paradox goes to the Hill’.

No single deployment decision about fairly innocuous hardware and software would have addressed the emerging problem. If ‘it’s the phones’ is true, the harm is severe enough that we ought to call the trend a massive policy failure. And more stringent product regulation would not have done anything about it.

On a related note, I’ve argued before in greater depth that product-focused regulatory approaches struggle to make sense of some of the most severe risks in AI. In short, two of the most concerning risk vectors are hasty deployments, where developers feel forced to launch an unsafe product as the consequence of an economic or geopolitical race, and loss of control over internally deployed models. Product regulation has difficulty addressing either: the former because desperate developers can decide to skirt the rules and risk facing the consequences, the latter because product regulation doesn’t reach internal deployment. Even setting aside all of the reasons above, I think this fact alone should make you wary of spending political capital meant for preventing grievous harms on product regulation.

AI will be much more surprising and arguably more transformative than the rollout of smartphones and the apps that run on them. If we missed systemic effects then, we can hardly hope to catch all of them a priori now. There’s a better way.

Preempting Piecemeal Product Policy

We avoid the fate of treating AI as a product by preempting the bottom-up patchwork of product-specific AI regulations. I think the most promising way to do so is AI policy that addresses the inputs into AI-driven transformation. There are important inputs along different dimensions of the broader challenge of ‘getting AI right’.

An emerging school of policy approaches shows how to target these inputs:

When it comes to responsible deployment decisions made on a developer-by-developer basis, minimal interventions like transparency requirements, as well as broader entity-based regulation, seem promising.

Likewise, private AI governance, if done right, can allow us to keep a qualified eye on AI developers without drafting onerous outcome-focused legislation.

There is a wealth-distribution and a geopolitical dimension to getting the proliferation of returns from AI right; predistributive approaches and making sure the AI stack is in stable democratic hands might address them.

There is a question of who gets to leverage, use and misuse advanced AI models, which requires responsible policymaking around AI diffusion, whether primarily compute-based or not.

And there is the question of how to make sure that AI unreliability and rogue behaviour don’t make the rest of the debate obsolete, which might require us to seriously revisit the issue of aligning and controlling AI systems.

In return, we might have to get more comfortable with taking our hands off close control of specific outputs. Yes, that means accepting much more uncertainty and some preventable near-future harms. There will be some scams, there will be some displacement, and some crimes will become easier to commit. I think that is par for the course for a wave of massive technological disruption, and I haven’t seen an approach that prevents these harms without preventing the disruption altogether, which seems both inadvisable and impossible given current competitive incentives.

You can’t have it both ways: even if product regulation had none of the paralysing effects I describe, we still only have so much political capital for decent rules on AI. I know that in the early days of frontier AI policy, every bit of regulation seemed like a win to many: at least it meant people were taking AI seriously. But that’s no longer where we are. There are real opportunity costs to setting up the wrong framework for thinking about AI regulation. Going for outcome-based product regulation denies us a forum to negotiate the important trade-offs, fails to address many important risks, and prevents us from getting the inputs right.

Abandoning the product view for the input approach sets us up for a much more promising story of distribution and diffusion, and for a more stable overall equilibrium; it means no longer missing the elephant for its parts.