A Roadmap For AI Middle Powers

First thoughts on leveraging bottlenecks for abundant intelligence

AI middle powers – that is, most advanced economies that are not the US or China – need to find a strategy for participating in rapid AI progress. Specifically, they need a durable connection to AI-driven economic growth and some strategic leverage in negotiations with the great AI powers. I argue the most promising path is leveraging bottlenecks: finding a sector that stands between AI progress and its real-world effects, and building economic and foreign policy around it.

Here’s Where We Are

Recently, I argued that what I call ‘AI middle powers’ – that is, most advanced economies that are not the US or China – are in a tough spot:

Their participation in AI-powered growth is contingent on finding a niche

Their share in AI benefits depends on compute & foreign policy

Their safety from AI-powered threats hinges on access to foreign top-tier defensive capabilities.

Currently, most middle powers, from Germany and France via India to Japan and South Korea, lack a clear path to avoid being left behind. What’s more, they also lack the motivation to make a plan: ‘situational awareness’, as it were. So any proposal faces a tricky political economy.

This post operates on what I take to be a conservative consensus position among people working in frontier AI: Over the next decade, AI capabilities will continue to increase rapidly, driven mostly by private companies in the US and China supported by their respective national governments. This will make AI systems meaningful potential drivers of growth and scientific progress, as well as central offensive and defensive tools in national security. I try to make the following arguments compatible with most specific versions of that overall story.

Here’s What Middle Powers Need

I think a more AI-centric world economy and the resulting novel strategic paradigm ask two questions of a middle power: What is its economic contribution to an AI-dominated economy, and what is its strategic leverage in an AI-driven world order?

They need an economic contribution for two reasons:

First, to create a business incentive to proliferate US- or China-built AI services into their country. If there is no strong, profitable sector that uses AI in your country, it might quickly become unprofitable for frontier AI companies to offer their full suite of products there, especially if operating in your country carries costs such as regulatory compliance or localisation. Maybe this incentive can even be your consumer market, if it’s big enough, but that’s much more contingent on (a) a very specific model of AI diffusion, with profits ultimately driven by B2C AI products, and (b) a fairly modest rate of AI-powered growth at home that keeps foreign markets worth the cost of entering. For one example of how this can go, look at Brussels digital policy: the size and power of the European economy long compelled tech companies to offer their products despite considerable costs, but that leverage has lately started to fail.

Second, more generally, to participate in the overall economic returns from AI. Many valuable contributions have recently tried to predict the overall economic returns of widespread AI adoption; however that goes, the returns are unlikely to be universal. As with most major technological revolutions in the past, AI diffusion would likely dramatically increase growth in some parts of the economy (and the world), and could cause stagnation and even destitution in others. In 1820, you probably didn’t want to be a weavers’ town.

These two factors are often conflated, but they are somewhat independent: If you reap some early-days benefits from AI growth by buying and using low-margin intelligence, that can still fail to structurally incentivize future participation. There was a brief period when the weavers mentioned above felt pretty good about the industrial production of their yarn, just before the power looms arrived. Conversely, even if your overall economy lags behind global growth trends, a sustainable AI-related sector might be all you need to motivate the developers and their national overlords to provide AI capabilities at large. That’s not very aspirational, but it is survivable, especially if AI has deflationary effects.

Middle powers also need strategic leverage even beyond these economic entanglements to make sure they aren’t entirely at the geopolitical mercy of their associated great AI power. Economic incentives are fine and sufficient as long as AI remains a predominantly civilian technology, where you can trade goods and leverage comparative advantage in a way that ultimately ensures mutually beneficial proliferation. But that’s only part of the story. Even though obvious security applications have failed to materialise so far, trends point toward increasing securitisation. This can lead to a world where countries need access to near-frontier capabilities to stave off AI-powered aggression, but these capabilities are safeguarded by state-run projects in the great AI powers. These state-run projects might not be too keen on sharing: recent policy writing from the US that second-guesses proliferation even within the Five Eyes intelligence alliance should send shivers down many middle powers’ spines. With all the Manhattan Project talk about, even many close US allies would do well to remember how much wrangling nuclear proliferation took, and what the US might have learned from it.

In that setting, a middle power might need to make a strategic contribution that gives allied great powers a reason to provide access to leading security-relevant models and participation in the compute supply chain. That reason should be compelling enough that great powers can’t unilaterally leverage the middle power’s dependency on their models and compute.

Here’s What I Think Middle Powers Should Do

To start, I don’t think the way to do that is for middle powers to get ambitious about building AI models themselves. The point has been made elsewhere; in short: In almost all middle powers, the infrastructural gap to the US and China is huge in terms of energy, compute, and talent, and competition for the latter two is at an all-time high. This is made worse by the shaky economic situation and resulting investment limitations of many of these powers, and by the comparative lack of venture capital that could alleviate them. If catching up were an option, it would obviously be advisable, but currently it does not seem realistic.

Given recent news, you might think that open source can bail you out after all, but that seems risky to count on: Fast-following tends to be easier early in a paradigm, securitisation makes top-tier proliferation through open source less likely, and the shift to inference scaling imposes large infrastructural requirements even on the use of open-source models.

So what should middle powers do instead? In short: Become valuable at the bottlenecks that arise as intelligence becomes cheaper and cheaper. Tyler Cowen says: “[Massive increases in intelligence] just means the other constraints in your system become a lot more binding, that the marginal importance of those goes up”. That can be leveraged.

On the economic level, that means scanning your economy for bottlenecks in translating AI capability gains into GDP growth, and then making a substantial contribution to widening them.

As to what these bottlenecks will be: That’s a whole area of contentious research I hope to see much more of. For starters, reasonable speculation likely converges on some general takeaways: Don’t over-index on knowledge-economy services that are likely to be replaced by, or moved to, the great powers at low cost rather than enhanced. Instead, sit at intersections with natural limitations to AI deployment and automation. Much more has been said elsewhere on what these might be. Generally, the production of (often physical) goods, either upstream or downstream of AI use, seems constraining and relevant. Specific versions of leverageable bottlenecks to AI-powered growth might be:

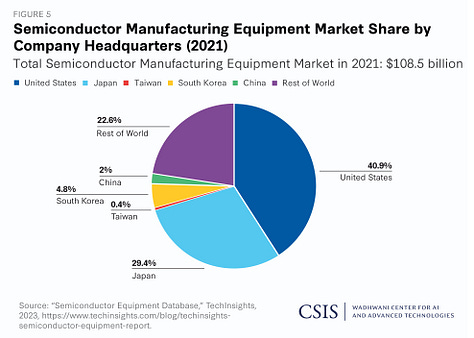

Strategic elements of the compute supply chain, from raw materials to lithography — the Netherlands and Taiwan are the obvious examples.

Novel & privileged sources of data, esp. around industrial and strategic applications. Israel, Singapore, and some smaller sectors of most industrial states do well on this.

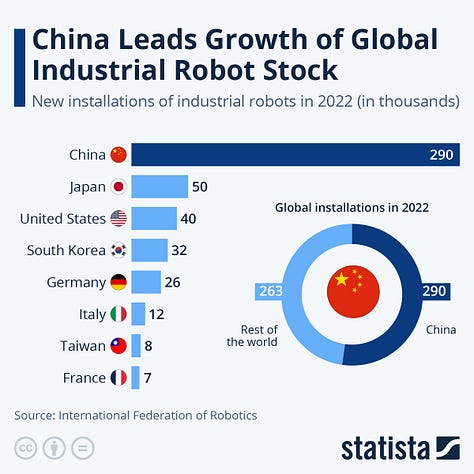

Robotics & general embodiment; anything that ramps up the ability of AI systems to interface with the real world. South Korea and Japan do well on this.

Industrial capacity writ large: Many avenues to the purported economic and social benefits of AI run through physically building things. Someone actually has to make the RNA vaccines and superweapons. Germany and Japan are good at this, but e.g. Italy and India also have a decent share.

Specifically: automated production quality that might reduce friction for implementing AI-driven efficiency gains & shorten iteration cycles for experimenting on AI-generated proposals.

And: sufficiently digitized production that enables comprehensive and real-time data collection for AI-powered optimization, quickfire iteration & troubleshooting.

A lot of people in AI will tell you that US- and China-based capacities in all these areas will also be boosted by advanced AI, stripping middle powers of their niches. The long history of comparative advantage and the current starting positions suggest that’s not a given, especially while both great powers are focused on an AI race.

This is not about country specifics, and the economic policy pathways to realize this are a contentious issue in themselves. But for a short illustration, imagine a country like Germany or Japan refocused its policy efforts on aggressively automating its still-substantial industrial capacity, provided the energy to scale it up quickly if need be, removed regulatory constraints on leveraging the troves of data that already exist, and then implemented highly advanced AI systems on top of that, buying intelligence at cheap market prices. I’m very optimistic that the returns to the quality and quantity of German or Japanese exports would be remarkable, and would exceed those of most conceivable alternative plans with similar political costs. In simple summary: When getting ready for increasing AI capabilities, you want to be ready to turn cheap intelligence into valuable growth.

Here’s How That Helps on Strategy & Security

Thinking in bottlenecks for intelligence also provides the necessary strategic leverage. Take the now-classic great power competition scenario for ~2028, where China and the US both chase increasing AI capabilities. What does actually prevailing in that scenario realistically look like for either of the great powers? Sure, there is some notion of capability progress where the leader gets to an ASI system that does something mind-blowing like deploying nanobots or providing a skeleton key to the world’s infrastructure, but reasonable people argue that this might be quite infeasible.

So I take the more realistic, albeit less exciting, view to be that getting to higher and higher AI capabilities mostly serves as a force multiplier: It makes your existing forces more and more effective, it helps you get a lot more out of your industrial capacity, it accelerates your R&D a lot, etc. Winning, in that sense, just means that your economy and military get a lot more bang for their buck due to some distribution of efficiency gains. If that is true, and the great powers chase ever-greater increases of the force multiplier, then middle powers have a comparative advantage in contributing to the multiplicand: increasing the base of capacity that is made more effective and efficient by increasingly abundant intelligence.

Practically speaking: If your AGI makes all industrial output you apply it to many times as valuable, then any allies that add industrial capacity get you a lot further in your great power competition. And if the US is already heavily invested in winning an AI race, it stands to reason that it won’t also effortlessly outpace its major allies on industrial capacity; it’s not that far ahead in terms of growth and GDP just yet. To put the point the other way around: If the US only had its own industrial capacity to leverage, its models would already have to be quite a bit better to make up for the gap to Chinese capacity. And if it ever fell behind, things would look pretty dicey pretty quickly. Sure, maybe AGI helps you rapidly ramp up your capacity. But maybe it doesn’t. Or maybe you won’t win decisively. Maybe three months of AGI advantage don’t ramp it up enough. Would you bet the house on that if you were sitting on the NSC?
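The force-multiplier argument can be stated as a toy model. This is an illustrative sketch under stated assumptions, not a quantitative claim: assume effective capacity $E$ is the product of an AI force multiplier $m$ and a base of industrial capacity $C$, and (a strong simplifying assumption) that a great power’s multiplier applies fully to allied capacity $C_A$ pooled with its own. The symbols and subscripts are labels introduced here for illustration.

$$
\begin{gathered}
E = m \cdot C \\
\text{US alone:}\quad m_{US}\,C_{US} \ge m_{CN}\,C_{CN} \iff \frac{m_{US}}{m_{CN}} \ge \frac{C_{CN}}{C_{US}} \\
\text{US with allies:}\quad m_{US}\,(C_{US} + C_{A}) \ge m_{CN}\,C_{CN} \iff \frac{m_{US}}{m_{CN}} \ge \frac{C_{CN}}{C_{US} + C_{A}}
\end{gathered}
$$

With all quantities positive and $C_A > 0$, the model advantage $m_{US}/m_{CN}$ needed to match China strictly shrinks when allies add capacity; that is the sense in which contributing to the multiplicand is a tradeable asset. If allied capacity were only partially ‘superchargeable’, $C_A$ would need a discount factor, which this sketch leaves out.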

So, back to middle powers: Imagine a situation in which the US is barely ahead in a tight capability race and broader geopolitical competition, and wants to cement that position. In this situation, Germany or Japan could offer the US a ‘superchargeable’ industry through which to capitalize on capability gains; countries like the Netherlands could offer even more comprehensive access to compute supply, and AI-powered improvements to it, etc. This might be a sufficiently meaningful contribution to geopolitical competition that the US would be motivated to trade for it, by extending its frontier-powered security umbrella and guaranteeing continued access to compute and civilian model use. And if the middle powers somehow caught up, or AI turned out to be a nothingburger after all, they’d still be left with a stronger strategic position than before. Great powers will not extend their hand out of the goodness of their heart, so it’s best to think about what one can trade.

Here’s What’s Next & How To Sell It

In summary, here’s a quick sketch of a roadmap for a middle power that’s actually interested in avoiding falling behind on autopilot:

Don’t get into a race you can’t win. Get enough compute to run crucial onshore inference; that’s it.

Find something in your economy that:

Is not directly AI

Bottlenecks returns of rapid AI progress to real-world effects

Can be argued to have some strategic dimension in great power competition

On economic policy, strongly prioritize making these sectors competitive and ‘AI-compatible’. This can include getting some compute for inference, but should mostly not be about AI per se. Think in months, not decades. Go into debt if you have to.

On foreign policy, leverage these capabilities in negotiations with an AI great power to get good, but realistic, terms to partake in compute supply, model access and security umbrellas. Do this as early as you can: the negotiating terms will get worse as AI gets bigger.

I can’t speak to political dynamics everywhere to the same extent, but by and large I think this sort of plan has the big advantage of a favourable political economy. In short: None of this requires a visible pivot to a tech- or AI-driven economic model. It can be framed as benefitting powerful incumbents, existing political coalitions, and plausibly at-risk demographics whose transformation shocks are being preempted. On the level of general political sentiment (or ‘vibes’), the plan can be framed as a culturally and politically continuous doubling down on existing strengths, while being entirely responsive to a paradigm shift.

Unlike more directly AI-related strategies, this roadmap avoids being characterised as speculative, risky, or captured by industry, a historically likely fate for much policy that tries to address upcoming transformation. Add to this the many individual incentives to deny the reality of disruptive AI effects, and you see how easily political resistance can be motivated. Any alternative plan for middle powers that puts a more overtly AI-focused push at its center, whether that’s development at the frontier or downstream deployments below it, is much more susceptible to these political hurdles. Given the currently very low uptake of ‘taking AI seriously’ in most of these middle powers, I think this political economy aspect matters.

AI middle powers are in big trouble and risk being left behind on economic benefits and security applications of frontier capabilities. While they might not catch up, they still have options: They can find sectors that benefit from rapid AI progress, equip them for that progress, and leverage them as a comparative advantage in negotiations with great powers. I think this is a somewhat robust plan with a somewhat favourable political economy that might be worth considering.